In today’s digital age, social media has become a central hub for communication, news, and self-expression. With billions of people sharing content across platforms every day, maintaining a healthy, safe environment has become a daunting task. The rapid surge in user-generated content brings with it a wave of challenges, from hate speech to misinformation, graphic material, and spam. To handle this immense flow, artificial intelligence (AI) is stepping in as a powerful solution for real-time, scalable content moderation.

The Growing Need for AI in Content Moderation

Social media companies must monitor an overwhelming volume of posts, videos, and comments to detect harmful or inappropriate content. Traditional methods that rely heavily on human moderation are no longer practical at this scale. Human moderators can be overwhelmed by the volume and are often exposed to disturbing content, which can lead to burnout and psychological strain. Manual moderation is also slow, leaving harmful content visible for too long. The scale of the problem shows up in spending: the global content moderation services market was estimated at USD 9.67 billion in 2023 and is expected to grow at a compound annual growth rate (CAGR) of 13.4% from 2024 to 2030.

AI-powered content moderation offers a faster, more efficient alternative. By combining machine learning (ML), natural language processing (NLP), and computer vision, AI can identify problematic content in near real time, giving platforms an automated way to keep digital spaces safer.

How AI-Powered Moderation Works

AI content moderation uses trained models to detect and filter harmful material such as violent images, hate speech, and disinformation. These models learn from large labeled datasets (examples that people have already marked as acceptable or violating) so they can recognize similar patterns in new text, images, and videos. For example, NLP tools analyze language to detect offensive or abusive comments, while computer vision models scan images and videos for inappropriate content.

Because they can be retrained on newly labeled data, these systems can adapt to evolving online behaviors and emerging trends. Running in parallel across many servers, they can screen millions of posts and comments far faster than any human team could.
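To make the idea of learning from labeled examples concrete, here is a minimal sketch of a text classifier. It uses multinomial naive Bayes, a classic baseline chosen here for brevity rather than the deep learning models large platforms typically deploy. The class name `NaiveBayesModerator`, the two labels, and the six training sentences are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Hypothetical multinomial naive Bayes classifier with add-one smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}  # label -> token frequencies
        self.doc_counts: Counter = Counter()       # label -> number of examples
        self.vocab: set[str] = set()

    def train(self, examples: list[tuple[str, str]]) -> None:
        for text, label in examples:
            tokens = tokenize(text)
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.vocab.update(tokens)

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Start from the log prior, P(label).
            score = math.log(self.doc_counts[label] / total_docs)
            for token in tokenize(text):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                # Add the smoothed log likelihood, P(token | label).
                score += math.log((counts[token] + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical, tiny labeled dataset; real systems train on millions of examples.
training_data = [
    ("you are an idiot and I hate you", "violating"),
    ("get lost you worthless loser", "violating"),
    ("I hate people like you, idiot", "violating"),
    ("thanks for sharing this great photo", "acceptable"),
    ("what a lovely day at the beach", "acceptable"),
    ("great post, thanks for the tips", "acceptable"),
]

model = NaiveBayesModerator()
model.train(training_data)
print(model.predict("you worthless idiot"))   # → violating
print(model.predict("thanks, lovely photo"))  # → acceptable
```

Retraining with newly labeled posts is simply another call to `train`, which is the mechanism behind the adaptation to new trends described above.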

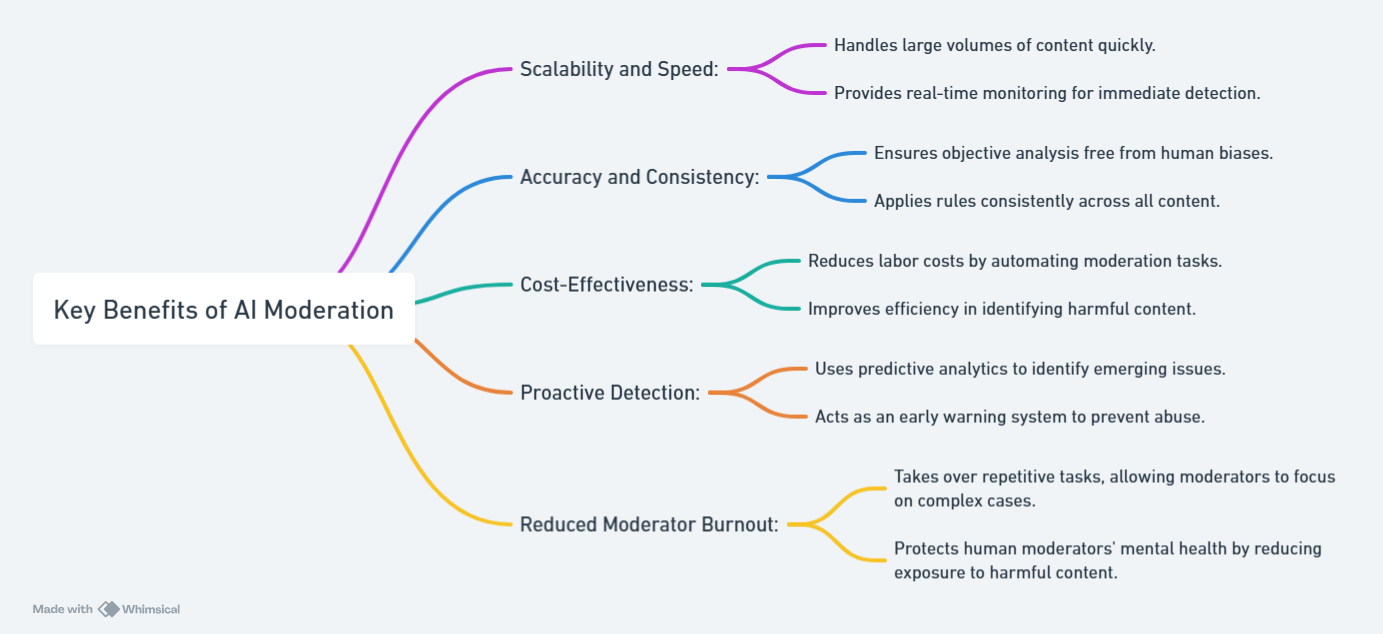

Key Benefits of AI Moderation

AI-powered content moderation provides several distinct advantages:

- Real-time detection: AI tools can screen content as soon as it is posted, flagging violations before harmful material spreads widely.

- Massive scalability: AI can analyze vast amounts of data, making it ideal for platforms with millions or billions of users.

- Consistency: AI applies platform policies the same way to every post, reducing the reviewer-to-reviewer variation and fatigue-related errors that affect manual moderation.

- Relief for human moderators: Automating obvious policy violations lets human moderators handle more complex, nuanced cases.

Key Technologies Used in AI-Powered Moderation

- Deep Learning: This technology helps AI systems learn from vast amounts of unstructured data, like text or images, to detect patterns in harmful content.

- Sentiment Analysis: AI can assess the emotional tone of a post, which serves as one signal when judging whether it might incite violence or harassment.

- Neural Networks: Loosely inspired by the structure of the brain, these layered models (the foundation of deep learning) learn to represent complex content, such as the visual elements in images or videos that indicate harmful material.
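Sentiment analysis can be illustrated with a minimal lexicon-based scorer: each word carries a tone weight, and a preceding negator flips the sign. Production systems usually rely on trained models instead; the word weights, the negation rule, and the review threshold below are hypothetical. Because tone alone does not show that a post incites violence, the result is used only to decide whether a post deserves a closer look.

```python
import re

# Hypothetical lexicon: word -> tone weight (negative values mean hostile tone).
TONE_LEXICON = {
    "love": 2, "great": 2, "thanks": 1, "happy": 2,
    "hate": -3, "stupid": -2, "disgusting": -3, "destroy": -2,
}
NEGATORS = {"not", "never", "no"}


def tone_score(text: str) -> int:
    """Sum lexicon weights, flipping the sign of a word right after a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        weight = TONE_LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight  # "not hate" counts as mildly positive
        score += weight
    return score


def needs_review(text: str, threshold: int = -3) -> bool:
    """Treat strongly negative tone as one signal for closer review, not a verdict."""
    return tone_score(text) <= threshold


print(tone_score("I hate this, it is disgusting"))  # → -6
print(tone_score("I do not hate you"))              # → 3
print(needs_review("Thanks, I love it"))            # → False
```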

Tackling Misinformation and Harmful Speech

One of the most pressing challenges for social media platforms is curbing the spread of misinformation. False information, whether related to health, politics, or social issues, can quickly go viral and cause widespread harm. AI helps combat this by flagging suspicious content for fact-checking or by cross-referencing claims with trusted sources.

NLP tools are also instrumental in identifying hate speech and toxic language. They analyze text not only for specific keywords but also for context, which allows them to detect coded or disguised harmful speech.
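Cross-referencing a post against claims that fact-checkers have already reviewed is often called claim matching. The sketch below uses simple word-overlap (Jaccard) similarity as a stand-in for the semantic models a real system would more likely use; the claim database, the verdict labels, and the 0.5 threshold are hypothetical. A match only routes the post for fact-checking or labeling; it does not establish truth by itself.

```python
import re
from typing import Optional


def token_set(text: str) -> set[str]:
    """Lowercased set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared tokens divided by all distinct tokens (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical database of claims already reviewed by fact-checkers.
FACT_CHECKED = {
    "drinking bleach cures the flu": "false",
    "the moon landing was filmed in a studio": "false",
}


def match_claim(post: str, threshold: float = 0.5) -> Optional[tuple[str, str, float]]:
    """Return (claim, verdict, similarity) for the closest reviewed claim, or None."""
    post_tokens = token_set(post)
    best = None
    for claim, verdict in FACT_CHECKED.items():
        score = jaccard(post_tokens, token_set(claim))
        if score >= threshold and (best is None or score > best[2]):
            best = (claim, verdict, score)
    return best


print(match_claim("BREAKING: drinking bleach cures the flu!"))
print(match_claim("Lovely weather at the beach today"))  # → None
```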

Filtering Graphic and Explicit Content

AI also plays a critical role in filtering out violent or explicit media. Computer vision technology can scan uploaded images and videos to detect inappropriate material like nudity, weapons, or violent acts. Platforms like YouTube and Facebook already rely on AI to scan and remove harmful videos before they are widely shared.
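Detecting nudity or weapons requires trained vision models that do not fit in a short example. A related, widely used technique is matching uploads against hashes of images already identified as harmful, so that re-uploads can be caught quickly; Microsoft's PhotoDNA is a well-known industrial example. The sketch below implements a simple average hash (aHash) instead, assuming the image has already been downscaled to an 8x8 grayscale grid; the 5-bit match threshold and the sample images are hypothetical.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """64-bit average hash of an 8x8 grayscale image (values 0-255).

    Each bit is 1 if that pixel is brighter than the image's mean brightness.
    """
    flat = [value for row in pixels for value in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grayscale image")
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def matches_known_content(image_hash: int, known_hashes: list[int], max_distance: int = 5) -> bool:
    """True if the hash is within max_distance bits of any known harmful image."""
    return any(hamming_distance(image_hash, known) <= max_distance for known in known_hashes)


# Hypothetical 8x8 images: a gradient, a slightly brightened copy, and an unrelated image.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[v + 3 for v in row] for row in original]
inverted = [[252 - v for v in row] for row in original]

known = [average_hash(original)]
print(matches_known_content(average_hash(brightened), known))  # → True
print(matches_known_content(average_hash(inverted), known))    # → False
```

Because the hash depends on brightness relative to the mean, small global edits such as a brightness change leave it unchanged, which is why this family of hashes suits re-upload detection.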

The Role of Human Moderation in an AI-Driven World

Although AI has made tremendous strides in content moderation, it is not a complete replacement for human judgment. AI systems can sometimes flag content incorrectly or miss subtleties that require a deeper understanding of context, culture, or language. To address this, many platforms use a hybrid model, where AI filters out the majority of harmful content, but more complex cases are escalated to human reviewers. This model ensures a balance between the speed of automation and the discernment of human judgment.
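The hybrid model can be sketched as confidence-based routing: a classifier outputs an estimated probability that a post violates policy, and two thresholds split posts into automatic removal, human review, and no action. The threshold values and the `route` function are hypothetical; in practice such thresholds would be tuned on labeled data.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str   # "remove", "human_review", or "allow"
    score: float  # model's estimated probability that the post violates policy


def route(score: float, remove_at: float = 0.95, review_at: float = 0.6) -> Decision:
    """Route a post based on a model's violation probability.

    High-confidence violations are removed automatically, uncertain cases go
    to human reviewers, and low scores are allowed.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability between 0 and 1")
    if score >= remove_at:
        return Decision("remove", score)
    if score >= review_at:
        return Decision("human_review", score)
    return Decision("allow", score)


# Hypothetical model scores for three posts.
for post_id, score in [("post-1", 0.98), ("post-2", 0.72), ("post-3", 0.10)]:
    print(post_id, route(score).action)
# post-1 remove
# post-2 human_review
# post-3 allow
```

Lowering `review_at` sends more posts to people, trading reviewer workload for fewer missed violations; raising `remove_at` reduces wrongful automatic removals at the cost of more manual review.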

Ethical Considerations and Privacy Concerns

The use of AI in content moderation comes with its own set of challenges, particularly around ethics and privacy. AI systems should be transparent and fair, and their decisions should be audited for bias. If not properly managed, these tools can disproportionately target certain groups or topics because of skewed training data or flawed algorithms.

Social media platforms must also be careful in handling the vast amount of data processed for content moderation. Privacy regulations such as the EU's General Data Protection Regulation (GDPR) constrain how that data may be collected, processed, and retained, so platforms must balance user safety with respect for individual privacy. Operating within these legal and ethical frameworks is crucial for maintaining trust and fairness.
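One practical check for disproportionate targeting is to compare error rates across groups. The sketch below computes the false positive rate per group: the share of posts that did not violate policy but were flagged anyway. The group names and audit records are hypothetical; a real audit would need a much larger, carefully labeled sample and would examine other metrics as well.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per group, the share of non-violating posts that were flagged.

    Each record is (group, was_flagged, actually_violating).
    """
    flagged_ok = defaultdict(int)  # non-violating posts that were flagged
    total_ok = defaultdict(int)    # all non-violating posts
    for group, was_flagged, violating in records:
        if not violating:
            total_ok[group] += 1
            if was_flagged:
                flagged_ok[group] += 1
    return {group: flagged_ok[group] / total_ok[group] for group in total_ok}


# Hypothetical audit sample: posts in two dialects, with human-verified labels.
audit = [
    ("dialect_a", True, False), ("dialect_a", False, False),
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", False, False),
    ("dialect_b", True, True),
]

rates = false_positive_rates(audit)
print(rates)  # → {'dialect_a': 0.25, 'dialect_b': 0.5}
```

In this made-up sample, acceptable posts in `dialect_b` are wrongly flagged twice as often, the kind of gap that would prompt a closer look at the training data.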

Future Directions for AI in Content Moderation

The future of AI in content moderation is full of promise. As AI systems continue to evolve, they are likely to become more adept at understanding the nuances of online content, from detecting sarcasm to spotting deepfake videos. Advances in AI transparency could also let platforms explain moderation decisions more clearly, strengthening trust between platforms and users.

Blockchain and other emerging technologies could make content moderation more transparent by keeping tamper-evident, immutable records of moderation decisions, including the policy cited for each action, so users can see why their posts were flagged or removed.
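The tamper-evidence behind this idea comes from chaining hashes: each record stores the hash of the one before it, so any later edit breaks the chain. The sketch below shows that property on a single machine; it is not a full blockchain (there is no distributed consensus or replication), and the `ModerationLog` class, record fields, and policy names are hypothetical.

```python
import hashlib
import json


def entry_hash(entry: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the entry."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class ModerationLog:
    """Hypothetical append-only log where each record commits to the previous hash."""

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, post_id: str, action: str, reason: str) -> dict:
        prev = self.records[-1]["hash"] if self.records else "0" * 64
        body = {"post_id": post_id, "action": action, "reason": reason, "prev_hash": prev}
        record = {**body, "hash": entry_hash(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered record breaks the chain."""
        prev = "0" * 64
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev or entry_hash(body) != record["hash"]:
                return False
            prev = record["hash"]
        return True


log = ModerationLog()
log.append("post-17", "remove", "hate speech policy")
log.append("post-42", "label", "disputed health claim")
print(log.verify())  # → True

log.records[0]["reason"] = "edited after the fact"  # simulate tampering
print(log.verify())  # → False
```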

Conclusion

AI-powered content moderation has revolutionized how social media platforms manage user-generated content. By automating the detection of harmful material, AI provides the speed, scale, and consistency necessary to maintain safer online environments. While complex cases still require human intervention, AI combined with human moderation creates an efficient, scalable solution for managing the vast amounts of content posted daily. As these technologies continue to evolve, AI’s role in content moderation will only grow, helping social platforms balance free expression with user safety.

FAQs

How is AI-generated content moderated?

AI-generated content is moderated by combining automated filters and human oversight. AI tools scan for harmful content using natural language processing (NLP) and image recognition, flagging violations for human moderators to review. This helps ensure that content complies with ethical guidelines and community standards.

How can AI be used for social media?

AI can personalize content feeds, analyze user sentiment, moderate posts for inappropriate content, automate customer support via chatbots, and optimize ad targeting. It enhances user engagement, improves safety, and provides insights into trends.

What are the disadvantages of AI in content moderation?

AI struggles with context, leading to misinterpretations of satire or humor. It can also perpetuate bias from training data, over-censor content, and misunderstand cultural nuances. Additionally, over-reliance on AI can result in mistakes that require human intervention.

How does AI moderation work?

AI moderation uses algorithms to scan text, images, and videos for harmful content. It flags potential violations based on pre-set rules or machine learning models. Flagged content is either removed automatically or escalated to human moderators, whose review improves accuracy.