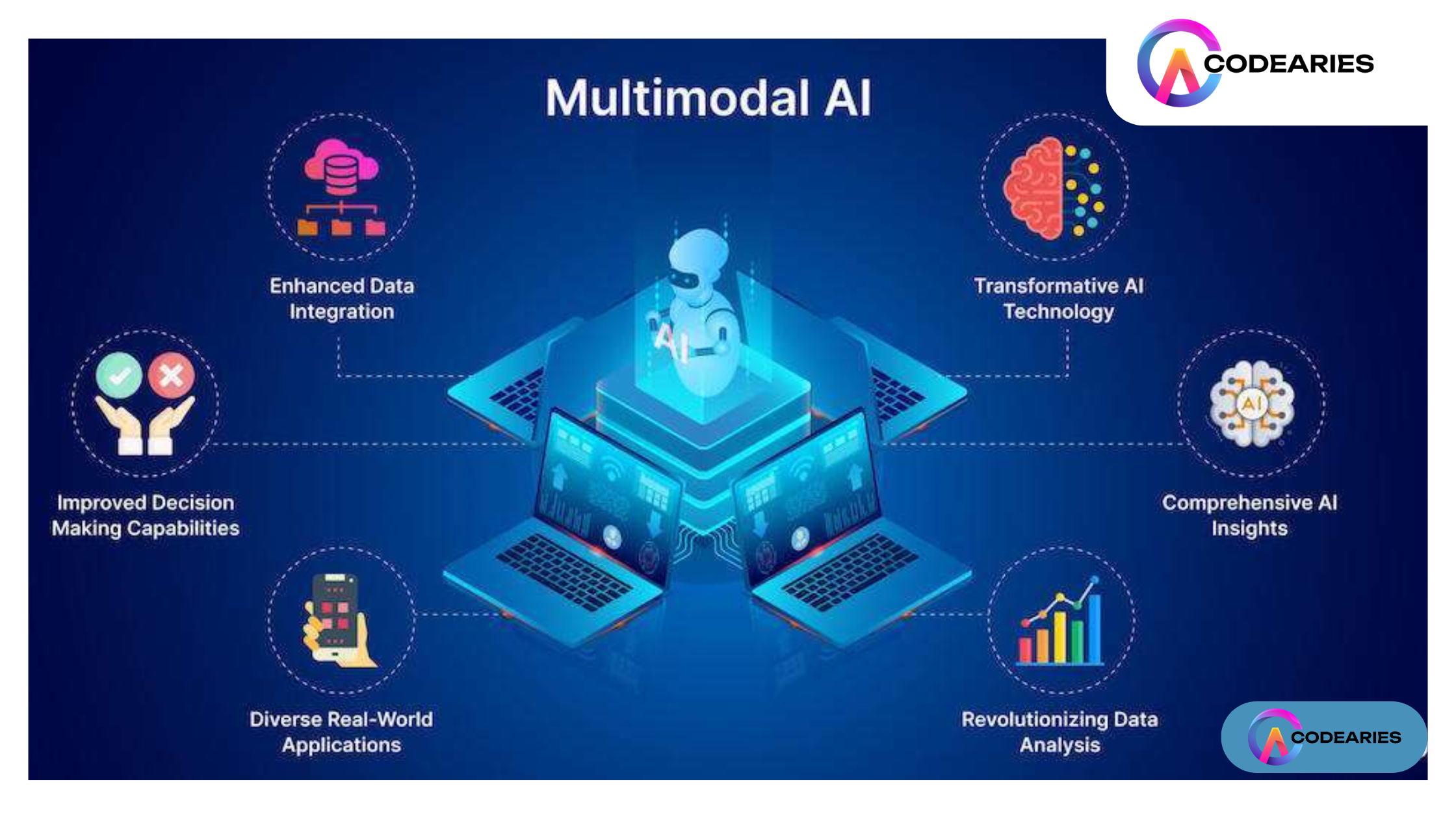

Multimodal AI: Enhancing Versatility in AI Systems through Diverse Data Integration

Introduction

Artificial Intelligence (AI) has made significant strides in recent years, and one of the most exciting developments is the rise of multimodal AI. By integrating different types of data (text, images, audio, and more), multimodal AI systems are poised to transform a wide range of applications, from virtual assistants to automated customer service. In this blog post, we’ll explore the key features, main focus areas, current and future trends, and real-world case studies of multimodal AI, as well as how CodeAries, an IT software development company, can help you build these advanced AI platforms.

Introduction to Multimodal AI

What is Multimodal AI?

Multimodal AI refers to the ability of AI systems to process and integrate multiple forms of data input, such as text, images, audio, and video, to perform tasks more effectively. Unlike traditional unimodal AI, which typically works with a single type of data, multimodal AI combines the strengths of several data types to build a more comprehensive and versatile understanding of information.

Importance of Multimodal AI

The integration of different data types allows AI to:

- Improve accuracy and reliability by cross-verifying information from multiple sources.

- Enhance user experience by providing more natural and intuitive interactions.

- Enable more complex and context-aware decision-making processes.

Key Features of Multimodal AI

1. Data Integration

Multimodal AI systems integrate various data types into a unified representation of information. This integration is crucial for tasks that require context from multiple sources, such as interpreting a scene in an image together with its textual description.
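One simple way to build such a unified representation is early fusion: encode each modality separately, normalize the resulting feature vectors, and concatenate them. The sketch below assumes the per-modality encoders already exist; the function names and the toy vectors are hypothetical placeholders, not part of any particular framework.

```python
import math
from typing import Dict, List


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return list(vec)  # leave all-zero vectors unchanged
    return [x / norm for x in vec]


def early_fusion(features: Dict[str, List[float]], order: List[str]) -> List[float]:
    """Normalize each modality's features, then concatenate them in a fixed order.

    A fixed `order` keeps each modality at the same position in every fused vector.
    """
    fused: List[float] = []
    for modality in order:
        fused.extend(l2_normalize(features[modality]))
    return fused


# Hypothetical encoder outputs for one image and its caption.
sample = {
    "image": [3.0, 4.0],
    "text": [0.0, 2.0, 0.0],
}
fused = early_fusion(sample, order=["image", "text"])
print(fused)  # [0.6, 0.8, 0.0, 1.0, 0.0]
```

Normalizing first keeps a modality with large raw values from dominating the fused vector. Production systems often go further and learn a joint projection or cross-attention between modalities instead of plain concatenation.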

2. Contextual Understanding

By combining data types, multimodal AI can achieve a deeper contextual understanding. For example, in customer service, analyzing both the textual content of a complaint and the tone of voice can provide better insights into customer sentiment.

3. Enhanced Interactivity

Multimodal AI enhances interactivity in applications like virtual assistants. For instance, a virtual assistant that can process voice commands, recognize faces, and understand text can offer a more seamless user experience.

4. Improved Decision-Making

The ability to draw from multiple data sources allows multimodal AI to make more informed and accurate decisions. This feature is particularly beneficial in fields like healthcare, where integrating patient records (text) with medical imaging (visual data) can improve diagnostic accuracy.

Main Focus Areas of Multimodal AI

1. Virtual Assistants

Virtual assistants, such as Amazon’s Alexa, Google Assistant, and Apple’s Siri, are becoming increasingly multimodal. They can process voice commands, display visual information, and provide textual responses, making interactions more intuitive and effective.

2. Automated Customer Service

Multimodal AI is transforming customer service by enabling systems to understand and respond to customer inquiries through multiple channels. For example, integrating chatbots with voice recognition and sentiment analysis can lead to more accurate and empathetic responses.

3. Healthcare

In healthcare, multimodal AI can combine electronic health records, medical imaging, and genetic data to offer comprehensive patient insights. This holistic approach can enhance diagnostics, treatment planning, and personalized medicine.

4. Autonomous Vehicles

Autonomous vehicles rely on multimodal AI to process data from cameras, LIDAR, radar, and GPS. Because each sensor has different strengths and blind spots, combining their data supports safer and more reliable navigation by providing a more complete view of the vehicle’s surroundings than any single sensor could.
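A classic building block for this kind of sensor fusion is inverse-variance weighting, which combines independent noisy measurements of the same quantity and trusts the least noisy sensor most; it is closely related to the measurement update in a Kalman filter. The sketch below is illustrative only: the readings and variances are made-up numbers, and it assumes each sensor's error is independent and unbiased, which real perception stacks cannot take for granted.

```python
from typing import List, Tuple


def fuse_estimates(readings: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent measurements.

    Each reading is (value, variance). Sensors with lower variance (less noise)
    receive proportionally more weight. Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    # The fused variance is never larger than the best single sensor's variance.
    return fused_value, 1.0 / total_weight


# Hypothetical distance-to-obstacle readings in metres: (value, variance).
readings = [
    (20.0, 0.25),  # radar
    (19.6, 0.04),  # LIDAR
    (21.0, 1.00),  # camera depth estimate
]
distance, variance = fuse_estimates(readings)
print(f"{distance:.2f} m, variance {variance:.4f}")  # 19.70 m, variance 0.0333
```

The fused estimate lands closest to the LIDAR reading because it has the smallest assumed variance, and the fused variance is lower than that of any individual sensor.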

5. Content Creation

Multimodal AI is also making its mark in content creation. AI systems can now generate videos, write articles, and create artwork by combining visual and textual data, opening up new possibilities for creativity and automation.

Current and Future Trends

Current Trends

1. Cross-Modal Learning

Cross-modal learning involves training AI models to understand and generate data across different modalities, for example, learning to match images with the captions that describe them. This approach is gaining traction because it improves the AI’s ability to learn from and respond to complex data inputs.
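A widely used way to train cross-modal models is a contrastive objective: encoders map images and texts into a shared embedding space, and the loss rewards each matching image-caption pair for scoring higher than every mismatched pair in the batch. The sketch below computes a symmetric contrastive (InfoNCE-style) loss, similar in spirit to the objective popularized by OpenAI's CLIP, in pure Python. The toy 2-D embeddings stand in for real encoder outputs, and the fixed temperature of 0.07 is an assumption (CLIP learns this value during training).

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one row of logits (log-sum-exp for stability)."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(images: List[Vector], texts: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss where images[i] and texts[i] are a matching pair."""
    n = len(images)
    # sims[i][j]: temperature-scaled similarity between image i and text j.
    sims = [[cosine(img, txt) / temperature for txt in texts] for img in images]
    # Image-to-text: row i should score highest at column i.
    image_to_text = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    # Text-to-image: column j should score highest at row j.
    columns = [[sims[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


# Toy 2-D embeddings standing in for real image and text encoder outputs.
images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(contrastive_loss(images, matched_texts))  # small: pairs already aligned
print(contrastive_loss(images, swapped_texts))  # large: pairs mismatched
```

During training, gradients of this loss would update both encoders; here it is only evaluated, to show that aligned pairs yield a much lower loss than swapped ones.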

2. Real-Time Multimodal Processing

Real-time processing of multimodal data is becoming more feasible with advancements in computing power and AI algorithms. This trend is crucial for applications like autonomous driving and real-time translation services.
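Before multimodal data can be fused in real time, the streams have to be synchronized, because cameras, microphones, and other sensors rarely sample at the same rate. A minimal sketch, assuming every sample carries a timestamp in seconds: for each video frame, binary-search the sorted audio timestamps and keep the nearest chunk if it falls within a tolerance. The stream rates and the 15 ms tolerance are illustrative assumptions.

```python
import bisect
from typing import List, Optional, Tuple


def align_streams(
    primary: List[float], secondary: List[float], tolerance: float
) -> List[Tuple[float, Optional[float]]]:
    """Pair each primary timestamp with the nearest secondary timestamp.

    Both lists hold timestamps in seconds, sorted ascending. If the nearest
    secondary timestamp is more than `tolerance` away, the pair gets None.
    """
    pairs: List[Tuple[float, Optional[float]]] = []
    for t in primary:
        # Binary search: secondary[i - 1] < t <= secondary[i] when both exist.
        i = bisect.bisect_left(secondary, t)
        neighbours = [secondary[j] for j in (i - 1, i) if 0 <= j < len(secondary)]
        nearest = min(neighbours, key=lambda s: abs(s - t), default=None)
        if nearest is not None and abs(nearest - t) <= tolerance:
            pairs.append((t, nearest))
        else:
            pairs.append((t, None))
    return pairs


video_frames = [0.00, 0.04, 0.08, 0.12]  # 25 frames per second
audio_chunks = [0.00, 0.03, 0.06, 0.09]  # one chunk every 30 ms
print(align_streams(video_frames, audio_chunks, tolerance=0.015))
# [(0.0, 0.0), (0.04, 0.03), (0.08, 0.09), (0.12, None)]
```

Because each lookup is a binary search, aligning n frames against m chunks costs O(n log m), which keeps this step cheap enough for streaming pipelines.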

3. Multimodal Sentiment Analysis

Sentiment analysis that combines text and audio data to gauge emotions more accurately is increasingly being adopted. This approach is particularly useful in customer service and social media monitoring.
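One straightforward design is late fusion: separate models score the text and the audio, and their outputs are combined afterwards. The sketch below assumes each model returns a sentiment score in [-1, 1]; the modality weights are hypothetical values that would normally be tuned on labeled data. It also shows why combining modalities helps: polite wording delivered in an angry tone comes out negative overall.

```python
from typing import Dict, Optional


def fuse_sentiment(scores: Dict[str, Optional[float]], weights: Dict[str, float]) -> float:
    """Late fusion of per-modality sentiment scores in [-1, 1].

    Each modality is scored by its own model; the results are combined with a
    weighted average. Modalities whose score is None (e.g. no audio on a chat
    message) are skipped and the remaining weights are renormalized.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("no modality produced a score")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical weights and scores: the words sound polite, the tone is angry.
weights = {"text": 0.6, "audio": 0.4}
call = fuse_sentiment({"text": 0.2, "audio": -0.8}, weights)
chat = fuse_sentiment({"text": 0.2, "audio": None}, weights)
print(f"{call:+.2f} {chat:+.2f}")  # -0.20 +0.20
```

Late fusion degrades gracefully when a channel is missing, such as a text-only chat, at the cost of ignoring interactions between modalities that early-fusion models can capture.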

Future Trends

1. Enhanced Personalization

Future multimodal AI systems will offer even greater levels of personalization by integrating more diverse data sources. For instance, a virtual assistant could use biometric data alongside voice and text to tailor responses more precisely to individual users.

2. Expanded Application Areas

The application areas for multimodal AI will continue to expand, covering fields such as education, entertainment, and smart homes. These systems will become more ingrained in daily life, offering smarter and more intuitive interactions.

3. Ethical and Privacy Considerations

As multimodal AI systems become more sophisticated, there will be increased focus on ethical and privacy considerations. Ensuring that these systems are used responsibly and that data is protected will be paramount.

Real-World Case Studies

1. Google Assistant

Overview: Google Assistant is a prime example of a multimodal AI system. It integrates voice recognition, natural language processing, and visual data from devices like smart displays.

Key Features:

- Voice and text interaction capabilities.

- Integration with smart home devices.

- Visual responses on compatible devices.

Impact: Google Assistant’s multimodal capabilities provide a seamless user experience, allowing users to interact naturally with their devices and access information effortlessly.

2. IBM Watson in Healthcare

Overview: IBM Watson has applied multimodal AI to healthcare with the aim of improving outcomes. By integrating textual data from medical literature and patient records with imaging data, Watson was designed to provide diagnostic and treatment recommendations.

Key Features:

- Analysis of structured and unstructured data.

- Integration of medical imaging with patient records.

- Natural language understanding for medical literature.

Impact: IBM Watson’s multimodal approach aims to improve the accuracy of diagnostics and personalized treatment plans, with the goal of better patient outcomes and more efficient healthcare delivery.

3. Tesla Autopilot

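Overview: Tesla’s Autopilot, a driver-assistance system, leverages multimodal AI to automate parts of the driving task. Depending on the model and hardware generation, it has integrated data from cameras, ultrasonic sensors, radar, and GPS to navigate and make driving decisions, although newer vehicles rely primarily on cameras.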

Key Features:

- Real-time data processing from multiple sensors.

- Contextual understanding of the driving environment.

- Automated steering, acceleration, and braking under active driver supervision.

Impact: Tesla’s multimodal AI system is intended to make driving safer and more convenient, and it is a step toward the broader goal of self-driving cars.

How CodeAries Can Help

Expertise in Multimodal AI Development

At CodeAries, we specialize in developing cutting-edge AI solutions that leverage multimodal data integration. Our team of experts is well-versed in the latest AI technologies and methodologies, ensuring that we can deliver high-quality, innovative AI systems tailored to your needs.

Custom Solutions for Diverse Applications

Whether you’re looking to develop a virtual assistant, an automated customer service platform, or an AI-driven healthcare solution, CodeAries can help. We offer custom AI development services that cater to a wide range of applications, ensuring that your AI system meets your specific requirements.

End-to-End Development Services

From initial consultation and requirement analysis to development, testing, and deployment, CodeAries provides end-to-end AI development services. Our comprehensive approach ensures that your AI project is executed smoothly and efficiently, delivering exceptional results.

Commitment to Ethical AI

At CodeAries, we are committed to developing ethical AI systems that respect user privacy and data security. We adhere to best practices in AI ethics, ensuring that our solutions are responsible and trustworthy.

Conclusion

Multimodal AI represents the next frontier in artificial intelligence, offering enhanced capabilities and more versatile applications by integrating diverse data types. From virtual assistants and automated customer service to healthcare and autonomous vehicles, the potential of multimodal AI is vast and exciting. As we move forward, the continued development and adoption of these technologies will lead to smarter, more intuitive, and more effective AI systems.

At CodeAries, we are dedicated to helping you harness the power of multimodal AI. With our expertise and commitment to excellence, we can assist in developing innovative AI solutions that meet your needs and drive your success. Contact us today to learn more about how we can help you navigate the future of AI.